The full process of deploying RocketMQ 5 on K8s

2025-02-15

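Background

I needed to deploy RocketMQ 5 in our development environment to verify the proxy features of the new version. That environment has Kubernetes, but neither Helm nor internet access.

I could not find much material on this online, so this post records the steps I took.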

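Procedure

1. Pull the RocketMQ 5 charts from the Helm repository

I used the Helm chart repository published by a community contributor:

    ## add the helm repo
    helm repo add rocketmq-repo https://helm-charts.itboon.top/rocketmq
    helm repo update rocketmq-repo
    ## list the charts
    helm search repo rocketmq
    ## pull both charts to the local machine
    helm pull rocketmq-repo/rocketmq
    helm pull rocketmq-repo/rocketmq-cluster
    ## unpack (the downloaded file name also carries the chart version)
    tar -zxf rocketmq.tgz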

2. Single-instance startup test

Go into the chart directory and edit values.yaml:

    clusterName: "rocketmq-helm"

    image:
      repository: "apache/rocketmq"
      pullPolicy: IfNotPresent
      tag: "5.3.0"

    podSecurityContext:
      fsGroup: 3000
      runAsUser: 3000

    broker:
      size:
        master: 1
        replica: 0
      # podSecurityContext: {}
      # containerSecurityContext: {}

      master:
        brokerRole: ASYNC_MASTER
        jvm:
          maxHeapSize: 256m
          # javaOptsOverride: ""
        resources:
          limits:
            cpu: 2
            memory: 512Mi
          requests:
            cpu: 200m
            memory: 256Mi

      replica:
        jvm:
          maxHeapSize: 256m
          # javaOptsOverride: ""
        resources:
          limits:
            cpu: 4
            memory: 512Mi
          requests:
            cpu: 50m
            memory: 256Mi

      hostNetwork: false

      persistence:
        enabled: true
        size: 100Mi
        #storageClass: "local-storage"

      aclConfigMapEnabled: false
      aclConfig: |
        globalWhiteRemoteAddresses:
          - '*'
          - 10.*.*.*
          - 192.168.*.*

      config:
        ## brokerClusterName, brokerName, brokerRole and brokerId are generated by the built-in script
        deleteWhen: "04"
        fileReservedTime: "48"
        flushDiskType: "ASYNC_FLUSH"
        waitTimeMillsInSendQueue: "1000"
        # aclEnable: true

      affinityOverride: {}
      tolerations: []
      nodeSelector: {}

      ## broker.readinessProbe
      readinessProbe:
        tcpSocket:
          port: main
        initialDelaySeconds: 10
        periodSeconds: 10
        timeoutSeconds: 3
        failureThreshold: 6

    nameserver:
      replicaCount: 1

      jvm:
        maxHeapSize: 256m
        # javaOptsOverride: ""

      resources:
        limits:
          cpu: 2
          memory: 256Mi
          ephemeral-storage: 256Mi
        requests:
          cpu: 100m
          memory: 256Mi
          ephemeral-storage: 256Mi

      persistence:
        enabled: false
        size: 256Mi
        #storageClass: "local-storage"

      affinityOverride: {}
      tolerations: []
      nodeSelector: {}

      ## nameserver.readinessProbe
      readinessProbe:
        tcpSocket:
          port: main
        initialDelaySeconds: 10
        periodSeconds: 10
        timeoutSeconds: 3
        failureThreshold: 6

      ## nameserver.service
      service:
        annotations: {}
        type: ClusterIP

    proxy:
      enabled: true
      replicaCount: 1

      jvm:
        maxHeapSize: 600m
        # javaOptsOverride: ""

      resources:
        limits:
          cpu: 2
          memory: 512Mi
        requests:
          cpu: 100m
          memory: 256Mi

      affinityOverride: {}
      tolerations: []
      nodeSelector: {}

      ## proxy.readinessProbe
      readinessProbe:
        tcpSocket:
          port: main
        initialDelaySeconds: 10
        periodSeconds: 10
        timeoutSeconds: 3
        failureThreshold: 6

      ## proxy.service
      service:
        annotations: {}
        type: ClusterIP

    dashboard:
      enabled: true
      replicaCount: 1

      image:
        repository: "apacherocketmq/rocketmq-dashboard"
        pullPolicy: IfNotPresent
        tag: "1.0.0"

      auth:
        enabled: true
        users:
          - name: admin
            password: admin
            isAdmin: true
          - name: user01
            password: userpass

      jvm:
        maxHeapSize: 256m

      resources:
        limits:
          cpu: 1
          memory: 512Mi
        requests:
          cpu: 20m
          memory: 512Mi

      ## dashboard.readinessProbe
      readinessProbe:
        failureThreshold: 6
        httpGet:
          path: /
          port: http
      livenessProbe: {}

      service:
        annotations: {}
        type: ClusterIP
        # nodePort: 31007

      ingress:
        enabled: false
        className: ""
        annotations: {}
          # nginx.ingress.kubernetes.io/whitelist-source-range: 10.0.0.0/8,124.160.30.50
        hosts:
          - host: rocketmq-dashboard.example.com
        tls: []
        # - secretName: example-tls
        #   hosts:
        #     - rocketmq-dashboard.example.com

    ## controller mode is an experimental feature
    controllerModeEnabled: false
    controller:
      enabled: false
      jvm:
        maxHeapSize: 256m
        # javaOptsOverride: ""
      resources:
        limits:
          cpu: 2
          memory: 512Mi
        requests:
          cpu: 100m
          memory: 256Mi
      persistence:
        enabled: true
        size: 256Mi
        accessModes:
          - ReadWriteOnce

      ## controller.service
      service:
        annotations: {}

      ## controller.config
      config:
        controllerDLegerGroup: group1
        enableElectUncleanMaster: false
        notifyBrokerRoleChanged: true

      ## controller.readinessProbe
      readinessProbe:
        tcpSocket:
          port: main
        initialDelaySeconds: 10
        periodSeconds: 10
        timeoutSeconds: 3
        failureThreshold: 6

Start it with Helm:

    helm upgrade --install rocketmq \
      --namespace rocketmq-demo \
      --create-namespace \
      --set broker.persistence.enabled="false" \
      ./rocketmq

3. StorageClass / PV configuration

I used storage mounted from a local directory.

StorageClass:

    vi sc_local.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: local-storage
      annotations:
        openebs.io/cas-type: local
        storageclass.kubernetes.io/is-default-class: "false"
        cas.openebs.io/config: |
          #hostpath type will create a PV by
          # creating a sub-directory under the
          # BASEPATH provided below.
          - name: StorageType
            value: "hostpath"
          #Specify the location (directory) where
          # PV(volume) data will be saved.
          # A sub-directory with pv-name will be
          # created. When the volume is deleted,
          # the PV sub-directory will be deleted.
          #Default value is /var/openebs/local
          - name: BasePath
            value: "/tmp/storage"
    provisioner: openebs.io/local
    volumeBindingMode: Immediate
    reclaimPolicy: Retain

    kubectl apply -f sc_local.yaml

PV (broker only):

    vi local_pv.yaml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      labels:
        type: local
      name: broker-storage-rocketmq-broker-master-0
      # PersistentVolumes are cluster-scoped, so a namespace is not needed here
      namespace: rocketmq-demo
    spec:
      accessModes:
        - ReadWriteOnce
      capacity:
        storage: 100Mi
      hostPath:
        path: /tmp/storage
      persistentVolumeReclaimPolicy: Recycle
      storageClassName: local-storage
      volumeMode: Filesystem
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      labels:
        type: local
      name: broker-storage-rocketmq-broker-replica-id1-0
      namespace: rocketmq-demo
    spec:
      accessModes:
        - ReadWriteOnce
      capacity:
        storage: 100Mi
      hostPath:
        path: /tmp/storageslave
      persistentVolumeReclaimPolicy: Recycle
      storageClassName: local-storage
      volumeMode: Filesystem

    kubectl apply -f local_pv.yaml
    # to remove the PVs again (note: --all deletes every PV in the cluster)
    kubectl delete pv --all

4. Cluster startup test

Edit values.yaml; the main change is lower resource settings:

    clusterName: "rocketmq-helm"
    nameOverride: rocketmq

    image:
      repository: "apache/rocketmq"
      pullPolicy: IfNotPresent
      tag: "5.3.0"

    podSecurityContext:
      fsGroup: 3000
      runAsUser: 3000

    broker:
      size:
        master: 1
        replica: 1
      # podSecurityContext: {}
      # containerSecurityContext: {}

      master:
        brokerRole: ASYNC_MASTER
        jvm:
          maxHeapSize: 512m
          # javaOptsOverride: ""
        resources:
          limits:
            cpu: 2
            memory: 512Mi
          requests:
            cpu: 100m
            memory: 128Mi

      replica:
        jvm:
          maxHeapSize: 256m
          # javaOptsOverride: ""
        resources:
          limits:
            cpu: 2
            memory: 256Mi
          requests:
            cpu: 50m
            memory: 128Mi

      hostNetwork: false

      persistence:
        enabled: true
        size: 100Mi
        #storageClass: "local-storage"

      aclConfigMapEnabled: false
      aclConfig: |
        globalWhiteRemoteAddresses:
          - '*'
          - 10.*.*.*
          - 192.168.*.*

      config:
        ## brokerClusterName, brokerName, brokerRole and brokerId are generated by the built-in script
        deleteWhen: "04"
        fileReservedTime: "48"
        flushDiskType: "ASYNC_FLUSH"
        waitTimeMillsInSendQueue: "1000"
        # aclEnable: true

      affinityOverride: {}
      tolerations: []
      nodeSelector: {}

      ## broker.readinessProbe
      readinessProbe:
        tcpSocket:
          port: main
        initialDelaySeconds: 10
        periodSeconds: 10
        timeoutSeconds: 3
        failureThreshold: 6

    nameserver:
      replicaCount: 1

      jvm:
        maxHeapSize: 256m
        # javaOptsOverride: ""

      resources:
        limits:
          cpu: 1
          memory: 256Mi
          ephemeral-storage: 256Mi
        requests:
          cpu: 100m
          memory: 128Mi
          ephemeral-storage: 128Mi

      persistence:
        enabled: false
        size: 128Mi
        #storageClass: "local-storage"

      affinityOverride: {}
      tolerations: []
      nodeSelector: {}

      ## nameserver.readinessProbe
      readinessProbe:
        tcpSocket:
          port: main
        initialDelaySeconds: 10
        periodSeconds: 10
        timeoutSeconds: 3
        failureThreshold: 6

      ## nameserver.service
      service:
        annotations: {}
        type: ClusterIP

    proxy:
      enabled: true
      replicaCount: 2

      jvm:
        maxHeapSize: 512m
        # javaOptsOverride: ""

      resources:
        limits:
          cpu: 2
          memory: 512Mi
        requests:
          cpu: 100m
          memory: 256Mi

      affinityOverride: {}
      tolerations: []
      nodeSelector: {}

      ## proxy.readinessProbe
      readinessProbe:
        tcpSocket:
          port: main
        initialDelaySeconds: 10
        periodSeconds: 10
        timeoutSeconds: 3
        failureThreshold: 6

      ## proxy.service
      service:
        annotations: {}
        type: ClusterIP

    dashboard:
      enabled: false
      replicaCount: 1

      image:
        repository: "apacherocketmq/rocketmq-dashboard"
        pullPolicy: IfNotPresent
        tag: "1.0.0"

      auth:
        enabled: true
        users:
          - name: admin
            password: admin
            isAdmin: true
          - name: user01
            password: userpass

      jvm:
        maxHeapSize: 256m

      resources:
        limits:
          cpu: 1
          memory: 256Mi
        requests:
          cpu: 20m
          memory: 128Mi

      ## dashboard.readinessProbe
      readinessProbe:
        failureThreshold: 6
        httpGet:
          path: /
          port: http
      livenessProbe: {}

      service:
        annotations: {}
        type: ClusterIP
        # nodePort: 31007

      ingress:
        enabled: false
        className: ""
        annotations: {}
          # nginx.ingress.kubernetes.io/whitelist-source-range: 10.0.0.0/8,124.160.30.50
        hosts:
          - host: rocketmq-dashboard.example.com
        tls: []
        # - secretName: example-tls
        #   hosts:
        #     - rocketmq-dashboard.example.com

    ## controller mode is an experimental feature
    controllerModeEnabled: false
    controller:
      enabled: false
      replicaCount: 3
      jvm:
        maxHeapSize: 256m
        # javaOptsOverride: ""
      resources:
        limits:
          cpu: 2
          memory: 256Mi
        requests:
          cpu: 100m
          memory: 128Mi
      persistence:
        enabled: true
        size: 128Mi
        accessModes:
          - ReadWriteOnce

      ## controller.service
      service:
        annotations: {}

      ## controller.config
      config:
        controllerDLegerGroup: group1
        enableElectUncleanMaster: false
        notifyBrokerRoleChanged: true

      ## controller.readinessProbe
      readinessProbe:
        tcpSocket:
          port: main
        initialDelaySeconds: 10
        periodSeconds: 10
        timeoutSeconds: 3
        failureThreshold: 6

5. Offline installation

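Export the YAML files with Helm:

    helm template rocketmq ./rocketmq-cluster --output-dir ./rocketmq-cluster-yaml

Note that once the chart has been rendered to YAML files, the namespace that would otherwise be set through Helm is gone.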
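A quick way to see which rendered files carry no namespace at all is sketched below. It is only a rough check under stated assumptions: the function name `list_missing_namespace` and the demo file names are made up for illustration, GNU grep is assumed, and the pattern relies on `namespace:` sitting at two-space indentation under `metadata:`. A file that holds several YAML documents counts as covered as soon as one of them sets a namespace.

```shell
#!/usr/bin/env bash
# Sketch: list rendered manifests that never set metadata.namespace.

list_missing_namespace() {
  local dir=$1
  # grep -L prints the files that contain NO matching line.
  # "|| true" keeps the pipeline usable whatever grep's exit status is.
  { grep -rL --include='*.yaml' -E '^  namespace: ' "$dir" || true; } | sort
}

# Self-contained demo on a throwaway directory (hypothetical file names).
demo_dir=$(mktemp -d)
printf 'kind: Service\nmetadata:\n  name: a\n' > "$demo_dir/svc.yaml"
printf 'kind: ConfigMap\nmetadata:\n  name: b\n  namespace: rocketmq\n' > "$demo_dir/cm.yaml"

missing=$(list_missing_namespace "$demo_dir")
echo "$missing"
```

Against the real output, the call would be `list_missing_namespace ./rocketmq-cluster-yaml`. Resources without a namespace end up in whatever namespace `kubectl apply` is run with, for example via `-n rocketmq`.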

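Apply the YAML files to verify them (and delete the resources again afterwards):

    kubectl apply -f rocketmq-cluster-yaml/ --recursive
    kubectl delete -f rocketmq-cluster-yaml/ --recursive

Export the YAML files:

    ## install the transfer tool
    yum install lrzsz
    ## pack the YAML folder
    tar czvf folder.tar.gz itboon
    sz folder.tar.gz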

Appendix

The deployment YAML that was finally generated:

- nameserver

    ---
    # Source: rocketmq-cluster/templates/nameserver/statefulset.yaml
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: "rocketmq-nameserver"
      namespace: rocketmq
      labels:
        helm.sh/chart: rocketmq-cluster-12.3.2
        app.kubernetes.io/name: rocketmq
        app.kubernetes.io/instance: rocketmq
        app.kubernetes.io/version: "5.3.0"
        app.kubernetes.io/managed-by: Helm
    spec:
      minReadySeconds: 20
      replicas: 1
      podManagementPolicy: Parallel
      selector:
        matchLabels:
          app.kubernetes.io/name: rocketmq
          app.kubernetes.io/instance: rocketmq
          component: nameserver
      serviceName: "rocketmq-nameserver-headless"
      template:
        metadata:
          annotations:
            checksum/config: 9323bc706d85f980c210e9823264a63548598b649c4935f9db6559d4fecbcc93
          labels:
            app.kubernetes.io/name: rocketmq
            app.kubernetes.io/instance: rocketmq
            component: nameserver
        spec:
          affinity:
            podAntiAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 5
                podAffinityTerm:
                  labelSelector:
                    matchLabels:
                      app.kubernetes.io/name: rocketmq
                      app.kubernetes.io/instance: rocketmq
                      component: nameserver
                  topologyKey: kubernetes.io/hostname
          securityContext:
            fsGroup: 3000
            runAsUser: 3000
          containers:
          - name: nameserver
            image: "apache/rocketmq:5.3.0"
            imagePullPolicy: IfNotPresent
            command:
              - sh
              - /mq-server-start.sh
            env:
            - name: ROCKETMQ_PROCESS_ROLE
              value: nameserver
            - name: ROCKETMQ_JAVA_OPTIONS_HEAP
              value: -Xms512m -Xmx512m
            ports:
            - containerPort: 9876
              name: main
              protocol: TCP
            resources:
              limits:
                cpu: 1
                ephemeral-storage: 512Mi
                memory: 512Mi
              requests:
                cpu: 100m
                ephemeral-storage: 256Mi
                memory: 256Mi
            readinessProbe:
              failureThreshold: 6
              initialDelaySeconds: 10
              periodSeconds: 10
              tcpSocket:
                port: main
              timeoutSeconds: 3
            lifecycle:
              preStop:
                exec:
                  command: ["sh", "-c", "sleep 5; ./mqshutdown namesrv"]
            volumeMounts:
            - mountPath: /mq-server-start.sh
              name: mq-server-start-sh
              subPath: mq-server-start.sh
            - mountPath: /etc/rocketmq/base-cm
              name: base-cm
            - mountPath: /home/rocketmq/logs
              name: nameserver-storage
              subPath: logs
          dnsPolicy: ClusterFirst
          terminationGracePeriodSeconds: 15
          volumes:
          - configMap:
              items:
              - key: mq-server-start.sh
                path: mq-server-start.sh
              name: rocketmq-server-config
              defaultMode: 0755
            name: mq-server-start-sh
          - configMap:
              name: rocketmq-server-config
            name: base-cm
          - name: nameserver-storage
            emptyDir: {}

    ---
    # Source: rocketmq-cluster/templates/nameserver/svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: rocketmq-nameserver
      labels:
        helm.sh/chart: rocketmq-cluster-12.3.2
        app.kubernetes.io/name: rocketmq
        app.kubernetes.io/instance: rocketmq
        app.kubernetes.io/version: "5.3.0"
        app.kubernetes.io/managed-by: Helm
        component: nameserver
    spec:
      ports:
      - port: 9876
        protocol: TCP
        targetPort: 9876
      selector:
        app.kubernetes.io/name: rocketmq
        app.kubernetes.io/instance: rocketmq
        component: nameserver
      type: "ClusterIP"
    ---
    # Source: rocketmq-cluster/templates/nameserver/svc-headless.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: "rocketmq-nameserver-headless"
      labels:
        helm.sh/chart: rocketmq-cluster-12.3.2
        app.kubernetes.io/name: rocketmq
        app.kubernetes.io/instance: rocketmq
        app.kubernetes.io/version: "5.3.0"
        app.kubernetes.io/managed-by: Helm
        component: nameserver
    spec:
      clusterIP: "None"
      publishNotReadyAddresses: true
      ports:
      - port: 9876
        protocol: TCP
        targetPort: 9876
      selector:
        app.kubernetes.io/name: rocketmq
        app.kubernetes.io/instance: rocketmq
        component: nameserver

- broker

    ---
    # Source: rocketmq-cluster/templates/broker/statefulset.yaml
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: rocketmq-broker-master
      namespace: rocketmq
      labels:
        helm.sh/chart: rocketmq-cluster-12.3.2
        app.kubernetes.io/name: rocketmq
        app.kubernetes.io/instance: rocketmq
        app.kubernetes.io/version: "5.3.0"
        app.kubernetes.io/managed-by: Helm
    spec:
      minReadySeconds: 20
      replicas: 1
      podManagementPolicy: OrderedReady
      selector:
        matchLabels:
          app.kubernetes.io/name: rocketmq
          app.kubernetes.io/instance: rocketmq
          component: broker
          broker: rocketmq-broker-master
      serviceName: ""
      template:
        metadata:
          annotations:
            checksum/config: 9323bc706d85f980c210e9823264a63548598b649c4935f9db6559d4fecbcc93
          labels:
            app.kubernetes.io/name: rocketmq
            app.kubernetes.io/instance: rocketmq
            component: broker
            broker: rocketmq-broker-master
        spec:
          affinity:
            podAntiAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 5
                podAffinityTerm:
                  labelSelector:
                    matchLabels:
                      app.kubernetes.io/name: rocketmq
                      app.kubernetes.io/instance: rocketmq
                      component: broker
                  topologyKey: kubernetes.io/hostname
          securityContext:
            fsGroup: 3000
            runAsUser: 3000
          containers:
          - name: broker
            image: "apache/rocketmq:5.3.0"
            imagePullPolicy: IfNotPresent
            command:
              - sh
              - /mq-server-start.sh
            env:
            - name: MY_POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: ROCKETMQ_PROCESS_ROLE
              value: broker
            - name: NAMESRV_ADDR
              value: rocketmq-nameserver-0.rocketmq-nameserver-headless.rocketmq.svc:9876
            - name: ROCKETMQ_CONF_brokerId
              value: "0"
            - name: ROCKETMQ_CONF_brokerRole
              value: "ASYNC_MASTER"
            - name: ROCKETMQ_JAVA_OPTIONS_HEAP
              value: -Xms1g -Xmx1g
            ports:
            - containerPort: 10909
              name: vip
              protocol: TCP
            - containerPort: 10911
              name: main
              protocol: TCP
            - containerPort: 10912
              name: ha
              protocol: TCP
            resources:
              limits:
                cpu: 2
                memory: 2Gi
              requests:
                cpu: 100m
                memory: 512Mi
            readinessProbe:
              failureThreshold: 6
              initialDelaySeconds: 10
              periodSeconds: 10
              tcpSocket:
                port: main
              timeoutSeconds: 3
            lifecycle:
              preStop:
                exec:
                  command: ["sh", "-c", "sleep 5; ./mqshutdown broker"]
            volumeMounts:
            - mountPath: /home/rocketmq/logs
              name: broker-storage
              subPath: rocketmq-broker/logs
            - mountPath: /home/rocketmq/store
              name: broker-storage
              subPath: rocketmq-broker/store
            - mountPath: /etc/rocketmq/broker-base.conf
              name: broker-base-config
              subPath: broker-base.conf
            - mountPath: /mq-server-start.sh
              name: mq-server-start-sh
              subPath: mq-server-start.sh
          dnsPolicy: ClusterFirst
          terminationGracePeriodSeconds: 30
          volumes:
          - configMap:
              items:
              - key: broker-base.conf
                path: broker-base.conf
              name: rocketmq-server-config
            name: broker-base-config
          - configMap:
              items:
              - key: mq-server-start.sh
                path: mq-server-start.sh
              name: rocketmq-server-config
              defaultMode: 0755
            name: mq-server-start-sh
      volumeClaimTemplates:
      - metadata:
          name: broker-storage
        spec:
          accessModes:
            - ReadWriteOnce
          storageClassName: local-path
          resources:
            requests:
              storage: "100Mi"

    ---
    # Source: rocketmq-cluster/templates/broker/statefulset.yaml
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: rocketmq-broker-replica-id1
      namespace: rocketmq
      labels:
        helm.sh/chart: rocketmq-cluster-12.3.2
        app.kubernetes.io/name: rocketmq
        app.kubernetes.io/instance: rocketmq
        app.kubernetes.io/version: "5.3.0"
        app.kubernetes.io/managed-by: Helm
    spec:
      minReadySeconds: 20
      replicas: 1
      podManagementPolicy: OrderedReady
      selector:
        matchLabels:
          app.kubernetes.io/name: rocketmq
          app.kubernetes.io/instance: rocketmq
          component: broker
          broker: rocketmq-broker-replica-id1
      serviceName: ""
      template:
        metadata:
          annotations:
            checksum/config: 9323bc706d85f980c210e9823264a63548598b649c4935f9db6559d4fecbcc93
          labels:
            app.kubernetes.io/name: rocketmq
            app.kubernetes.io/instance: rocketmq
            component: broker
            broker: rocketmq-broker-replica-id1
        spec:
          affinity:
            podAntiAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 5
                podAffinityTerm:
                  labelSelector:
                    matchLabels:
                      app.kubernetes.io/name: rocketmq
                      app.kubernetes.io/instance: rocketmq
                      component: broker
                  topologyKey: kubernetes.io/hostname
          securityContext:
            fsGroup: 3000
            runAsUser: 3000
          containers:
          - name: broker
            image: "apache/rocketmq:5.3.0"
            imagePullPolicy: IfNotPresent
            command:
              - sh
              - /mq-server-start.sh
            env:
            - name: MY_POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: ROCKETMQ_PROCESS_ROLE
              value: broker
            - name: NAMESRV_ADDR
              value: rocketmq-nameserver-0.rocketmq-nameserver-headless.rocketmq.svc:9876
            - name: ROCKETMQ_CONF_brokerId
              value: "1"
            - name: ROCKETMQ_CONF_brokerRole
              value: "SLAVE"
            - name: ROCKETMQ_JAVA_OPTIONS_HEAP
              value: -Xms1g -Xmx1g
            ports:
            - containerPort: 10909
              name: vip
              protocol: TCP
            - containerPort: 10911
              name: main
              protocol: TCP
            - containerPort: 10912
              name: ha
              protocol: TCP
            resources:
              limits:
                cpu: 2
                memory: 1Gi
              requests:
                cpu: 50m
                memory: 512Mi
            readinessProbe:
              failureThreshold: 6
              initialDelaySeconds: 10
              periodSeconds: 10
              tcpSocket:
                port: main
              timeoutSeconds: 3
            lifecycle:
              preStop:
                exec:
                  command: ["sh", "-c", "sleep 5; ./mqshutdown broker"]
            volumeMounts:
            - mountPath: /home/rocketmq/logs
              name: broker-storage
              subPath: rocketmq-broker/logs
            - mountPath: /home/rocketmq/store
              name: broker-storage
              subPath: rocketmq-broker/store
            - mountPath: /etc/rocketmq/broker-base.conf
              name: broker-base-config
              subPath: broker-base.conf
            - mountPath: /mq-server-start.sh
              name: mq-server-start-sh
              subPath: mq-server-start.sh
          dnsPolicy: ClusterFirst
          terminationGracePeriodSeconds: 30
          volumes:
          - configMap:
              items:
              - key: broker-base.conf
                path: broker-base.conf
              name: rocketmq-server-config
            name: broker-base-config
          - configMap:
              items:
              - key: mq-server-start.sh
                path: mq-server-start.sh
              name: rocketmq-server-config
              defaultMode: 0755
            name: mq-server-start-sh
      volumeClaimTemplates:
      - metadata:
          name: broker-storage
        spec:
          accessModes:
            - ReadWriteOnce
          storageClassName: local-path
          resources:
            requests:
              storage: "100Mi"

- proxy

    ---
    # Source: rocketmq-cluster/templates/proxy/deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: "rocketmq-proxy"
      namespace: rocketmq
      labels:
        helm.sh/chart: rocketmq-cluster-12.3.2
        app.kubernetes.io/name: rocketmq
        app.kubernetes.io/instance: rocketmq
        app.kubernetes.io/version: "5.3.0"
        app.kubernetes.io/managed-by: Helm
    spec:
      minReadySeconds: 20
      replicas: 2
      selector:
        matchLabels:
          app.kubernetes.io/name: rocketmq
          app.kubernetes.io/instance: rocketmq
          component: proxy
      template:
        metadata:
          annotations:
            checksum/config: 9323bc706d85f980c210e9823264a63548598b649c4935f9db6559d4fecbcc93
          labels:
            app.kubernetes.io/name: rocketmq
            app.kubernetes.io/instance: rocketmq
            component: proxy
        spec:
          affinity:
            podAntiAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 5
                podAffinityTerm:
                  labelSelector:
                    matchLabels:
                      app.kubernetes.io/name: rocketmq
                      app.kubernetes.io/instance: rocketmq
                      component: proxy
                  topologyKey: kubernetes.io/hostname
          securityContext:
            fsGroup: 3000
            runAsUser: 3000
          containers:
          - name: proxy
            image: "apache/rocketmq:5.3.0"
            imagePullPolicy: IfNotPresent
            command:
              - sh
              - /mq-server-start.sh
            env:
            - name: NAMESRV_ADDR
              value: rocketmq-nameserver-0.rocketmq-nameserver-headless.rocketmq.svc:9876
            - name: ROCKETMQ_PROCESS_ROLE
              value: proxy
            - name: RMQ_PROXY_CONFIG_PATH
              value: /etc/rocketmq/proxy.json
            - name: ROCKETMQ_JAVA_OPTIONS_HEAP
              value: -Xms1g -Xmx1g
            ports:
            - name: main
              containerPort: 8080
              protocol: TCP
            - name: grpc
              containerPort: 8081
              protocol: TCP
            resources:
              limits:
                cpu: 2
                memory: 1Gi
              requests:
                cpu: 100m
                memory: 512Mi
            readinessProbe:
              failureThreshold: 6
              initialDelaySeconds: 10
              periodSeconds: 10
              tcpSocket:
                port: main
              timeoutSeconds: 3
            lifecycle:
              preStop:
                exec:
                  command: ["sh", "-c", "sleep 5; ./mqshutdown proxy"]
            volumeMounts:
            - mountPath: /mq-server-start.sh
              name: mq-server-start-sh
              subPath: mq-server-start.sh
            - mountPath: /etc/rocketmq/proxy.json
              name: proxy-json
              subPath: proxy.json
          dnsPolicy: ClusterFirst
          terminationGracePeriodSeconds: 15
          volumes:
          - configMap:
              items:
              - key: mq-server-start.sh
                path: mq-server-start.sh
              name: rocketmq-server-config
              defaultMode: 0755
            name: mq-server-start-sh
          - configMap:
              items:
              - key: proxy.json
                path: proxy.json
              name: rocketmq-server-config
            name: proxy-json

    ---
    # Source: rocketmq-cluster/templates/proxy/service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: rocketmq-proxy
      labels:
        helm.sh/chart: rocketmq-cluster-12.3.2
        app.kubernetes.io/name: rocketmq
        app.kubernetes.io/instance: rocketmq
        app.kubernetes.io/version: "5.3.0"
        app.kubernetes.io/managed-by: Helm
        component: proxy
    spec:
      ports:
      - port: 8080
        name: main
        protocol: TCP
        targetPort: 8080
      - port: 8081
        name: grpc
        protocol: TCP
        targetPort: 8081
      selector:
        app.kubernetes.io/name: rocketmq
        app.kubernetes.io/instance: rocketmq
        component: proxy
      type: "ClusterIP"

- configmap

    ---
    # Source: rocketmq-cluster/templates/configmap.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: rocketmq-server-config
      namespace: rocketmq
    data:
      broker-base.conf: |
        deleteWhen = 04
        fileReservedTime = 48
        flushDiskType = ASYNC_FLUSH
        waitTimeMillsInSendQueue = 1000
        brokerClusterName = rocketmq-helm
      controller-base.conf: |
        controllerDLegerGroup = group1
        enableElectUncleanMaster = false
        notifyBrokerRoleChanged = true
        controllerDLegerPeers = n0-rocketmq-controller-0.rocketmq-controller.rocketmq.svc:9878;n1-rocketmq-controller-1.rocketmq-controller.rocketmq.svc:9878;n2-rocketmq-controller-2.rocketmq-controller.rocketmq.svc:9878
        controllerStorePath = /home/rocketmq/controller-data
      proxy.json: |
        {
          "rocketMQClusterName": "rocketmq-helm"
        }
      mq-server-start.sh: |
        java -version
        if [ $? -ne 0 ]; then
          echo "[ERROR] Missing java runtime"
          exit 50
        fi
        if [ -z "${ROCKETMQ_HOME}" ]; then
          echo "[ERROR] Missing env ROCKETMQ_HOME"
          exit 50
        fi
        if [ -z "${ROCKETMQ_PROCESS_ROLE}" ]; then
          echo "[ERROR] Missing env ROCKETMQ_PROCESS_ROLE"
          exit 50
        fi

        export JAVA_HOME=$(dirname $(dirname $(readlink -f $(which java))))
        export CLASSPATH=".:${ROCKETMQ_HOME}/conf:${ROCKETMQ_HOME}/lib/*:${CLASSPATH}"

        JAVA_OPT="${JAVA_OPT} -server"
        if [ -n "$ROCKETMQ_JAVA_OPTIONS_OVERRIDE" ]; then
          JAVA_OPT="${JAVA_OPT} ${ROCKETMQ_JAVA_OPTIONS_OVERRIDE}"
        else
          JAVA_OPT="${JAVA_OPT} -XX:+UseG1GC"
          JAVA_OPT="${JAVA_OPT} ${ROCKETMQ_JAVA_OPTIONS_EXT}"
          JAVA_OPT="${JAVA_OPT} ${ROCKETMQ_JAVA_OPTIONS_HEAP}"
        fi
        JAVA_OPT="${JAVA_OPT} -cp ${CLASSPATH}"

        export BROKER_CONF_FILE="$HOME/broker.conf"
        export CONTROLLER_CONF_FILE="$HOME/controller.conf"

        # replace any existing "key = ..." line in broker.conf with the new value
        update_broker_conf() {
          local key=$1
          local value=$2
          sed -i "/^${key} *=/d" ${BROKER_CONF_FILE}
          echo "${key} = ${value}" >> ${BROKER_CONF_FILE}
        }

        init_broker_role() {
          if [ "${ROCKETMQ_CONF_brokerRole}" = "SLAVE" ]; then
            update_broker_conf "brokerRole" "SLAVE"
          elif [ "${ROCKETMQ_CONF_brokerRole}" = "SYNC_MASTER" ]; then
            update_broker_conf "brokerRole" "SYNC_MASTER"
          else
            update_broker_conf "brokerRole" "ASYNC_MASTER"
          fi
          if echo "${ROCKETMQ_CONF_brokerId}" | grep -E '^[0-9]+$'; then
            update_broker_conf "brokerId" "${ROCKETMQ_CONF_brokerId}"
          fi
        }

        init_broker_conf() {
          rm -f ${BROKER_CONF_FILE}
          cp /etc/rocketmq/broker-base.conf ${BROKER_CONF_FILE}
          echo "" >> ${BROKER_CONF_FILE}
          echo "# generated config" >> ${BROKER_CONF_FILE}
          # the broker name is derived from the pod ordinal (text after the last "-")
          broker_name_seq=${HOSTNAME##*-}
          if [ -n "$MY_POD_NAME" ]; then
            broker_name_seq=${MY_POD_NAME##*-}
          fi
          update_broker_conf "brokerName" "broker-g${broker_name_seq}"
          if [ "$enableControllerMode" != "true" ]; then
            init_broker_role
          fi
          echo "[exec] cat ${BROKER_CONF_FILE}"
          cat ${BROKER_CONF_FILE}
        }

        init_acl_conf() {
          if [ -f /etc/rocketmq/acl/plain_acl.yml ]; then
            rm -f "${ROCKETMQ_HOME}/conf/plain_acl.yml"
            ln -sf "/etc/rocketmq/acl" "${ROCKETMQ_HOME}/conf/acl"
          fi
        }

        init_controller_conf() {
          rm -f ${CONTROLLER_CONF_FILE}
          cp /etc/rocketmq/base-cm/controller-base.conf ${CONTROLLER_CONF_FILE}
          controllerDLegerSelfId="n${HOSTNAME##*-}"
          if [ -n "$MY_POD_NAME" ]; then
            controllerDLegerSelfId="n${MY_POD_NAME##*-}"
          fi
          sed -i "/^controllerDLegerSelfId *=/d" ${CONTROLLER_CONF_FILE}
          echo "controllerDLegerSelfId = ${controllerDLegerSelfId}" >> ${CONTROLLER_CONF_FILE}
          cat ${CONTROLLER_CONF_FILE}
        }

        if [ "$ROCKETMQ_PROCESS_ROLE" = "broker" ]; then
          init_broker_conf
          init_acl_conf
          set -x
          java ${JAVA_OPT} org.apache.rocketmq.broker.BrokerStartup -c ${BROKER_CONF_FILE}
        elif [ "$ROCKETMQ_PROCESS_ROLE" = "controller" ]; then
          init_controller_conf
          set -x
          java ${JAVA_OPT} org.apache.rocketmq.controller.ControllerStartup -c ${CONTROLLER_CONF_FILE}
        elif [ "$ROCKETMQ_PROCESS_ROLE" = "nameserver" ] || [ "$ROCKETMQ_PROCESS_ROLE" = "mqnamesrv" ]; then
          set -x
          if [ "$enableControllerInNamesrv" = "true" ]; then
            init_controller_conf
            java ${JAVA_OPT} org.apache.rocketmq.namesrv.NamesrvStartup -c ${CONTROLLER_CONF_FILE}
          else
            java ${JAVA_OPT} org.apache.rocketmq.namesrv.NamesrvStartup
          fi
        elif [ "$ROCKETMQ_PROCESS_ROLE" = "proxy" ]; then
          set -x
          if [ -f $RMQ_PROXY_CONFIG_PATH ]; then
            java ${JAVA_OPT} org.apache.rocketmq.proxy.ProxyStartup -pc $RMQ_PROXY_CONFIG_PATH
          else
            java ${JAVA_OPT} org.apache.rocketmq.proxy.ProxyStartup
          fi
        else
          echo "[ERROR] Missing env ROCKETMQ_PROCESS_ROLE"
          exit 50
        fi
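To make the startup script easier to follow, here is a small stand-alone sketch of its delete-then-append idiom for broker.conf and of how the broker name is derived from the pod ordinal. The temp file and the sample values are made up for the demo, and GNU sed is assumed for `sed -i`.

```shell
#!/usr/bin/env bash
# Sketch: how update_broker_conf rewrites a key, run against a scratch file.

BROKER_CONF_FILE=$(mktemp)
printf 'brokerClusterName = rocketmq-helm\nbrokerRole = ASYNC_MASTER\n' > "$BROKER_CONF_FILE"

update_broker_conf() {
  local key=$1
  local value=$2
  # Drop any existing "key = ..." line, then append the new value,
  # so every key ends up in the file exactly once.
  sed -i "/^${key} *=/d" "${BROKER_CONF_FILE}"
  echo "${key} = ${value}" >> "${BROKER_CONF_FILE}"
}

update_broker_conf "brokerRole" "SLAVE"
update_broker_conf "brokerId" "1"

# "##*-" strips everything up to the last dash, leaving the pod ordinal.
MY_POD_NAME="rocketmq-broker-replica-id1-0"
update_broker_conf "brokerName" "broker-g${MY_POD_NAME##*-}"

cat "$BROKER_CONF_FILE"
```

The master pod rocketmq-broker-master-0 has the same ordinal, so both brokers get the name broker-g0 and are told apart by brokerId and brokerRole.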

踩坑

- 存储权限问题

配置完pv后启动一直报错。

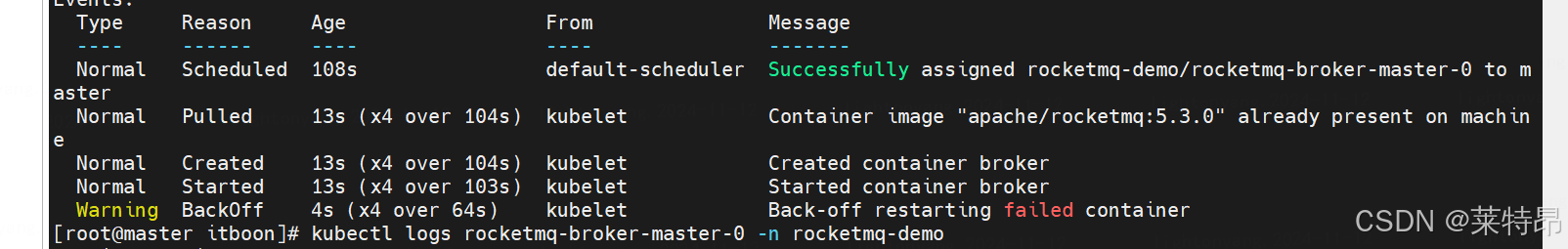

查日志:

kubectl describe pod rocketmq-broker-master-0 -n rocketmq-demo

结果:

查应用启动日志:

kubectl logs rocketmq-broker-master-0 -n rocketmq-demo

结果:

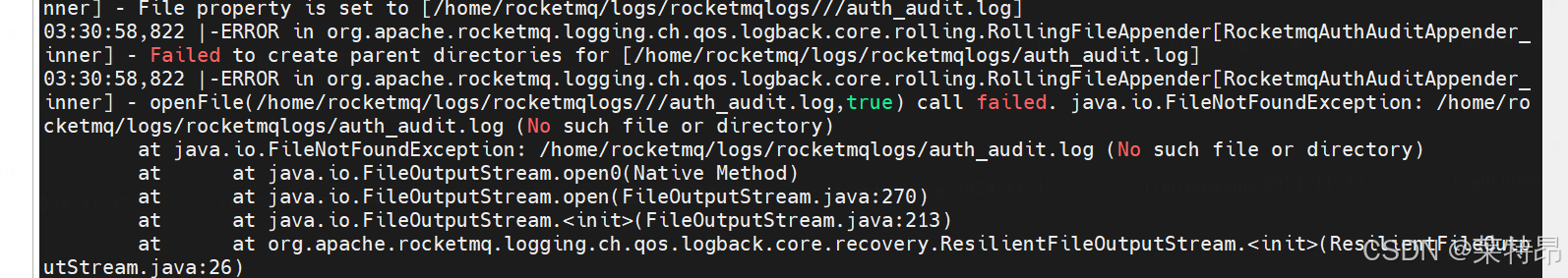

具体错误信息:

03:30:58,822 |-error in org.apache.rocketmq.logging.ch.qos.logback.core.rolling.rollingfileappender[rocketmqauthauditappender_inner] - failed to create parent directories for [/home/rocketmq/logs/rocketmqlogs/auth_audit.log]

03:30:58,822 |-error in org.apache.rocketmq.logging.ch.qos.logback.core.rolling.rollingfileappender[rocketmqauthauditappender_inner] - openfile(/home/rocketmq/logs/rocketmqlogs///auth_audit.log,true) call failed. java.io.filenotfoundexception: /home/rocketmq/logs/rocketmqlogs/auth_audit.log (no such file or directory)

at java.io.filenotfoundexception: /home/rocketmq/logs/rocketmqlogs/auth_audit.log (no such file or directory)

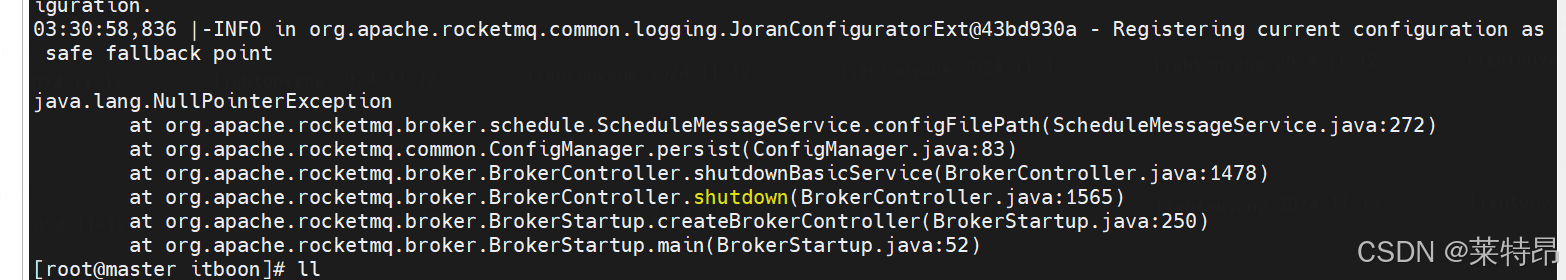

java.lang.nullpointerexception

at org.apache.rocketmq.broker.schedule.schedulemessageservice.configfilepath(schedulemessageservice.java:272)

at org.apache.rocketmq.common.configmanager.persist(configmanager.java:83)

at org.apache.rocketmq.broker.brokercontroller.shutdownbasicservice(brokercontroller.java:1478)

at org.apache.rocketmq.broker.brokercontroller.shutdown(brokercontroller.java:1565)

at org.apache.rocketmq.broker.brokerstartup.createbrokercontroller(brokerstartup.java:250)

at org.apache.rocketmq.broker.brokerstartup.main(brokerstartup.java:52)

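A search suggested that the pod had no permission to read or write the mounted local directory.

The fix:

1. Move the directory out of root's home

The directory had been created under the root account, while the pods are started through the kubectl account. I moved the mounted directory to /tmp and updated the PV file above accordingly.

2. Create the PV directory in advance

Create /tmp/storage beforehand; otherwise startup fails because the directory behind the PVC does not exist.

3. Use chmod to grant read/write permission on the directory and its subdirectories. The -R flag (capital R) is needed so the change is applied recursively.

    chmod -R 777 storage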

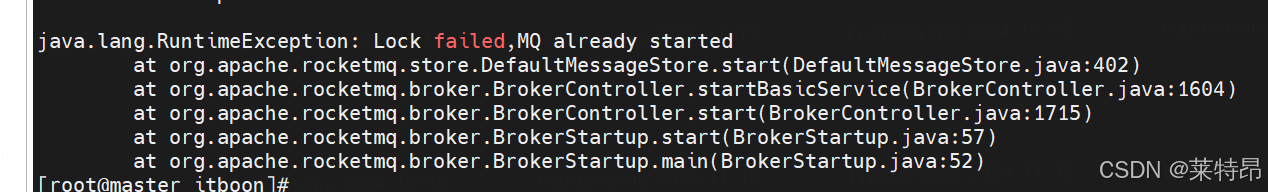
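The three steps above can be sketched as one small script. It is a sketch only: `STORAGE_ROOT` and `prepare_dir` are names introduced here for illustration, and the default points at a scratch directory so the demo runs anywhere. On a real node `STORAGE_ROOT` would be /tmp, to match the hostPath values in the PV file.

```shell
#!/usr/bin/env bash
# Sketch: create the hostPath directories and open up their permissions
# before the PVs are applied.

STORAGE_ROOT=${STORAGE_ROOT:-$(mktemp -d)}

prepare_dir() {
  local dir=$1
  mkdir -p "$dir"        # step 2: the directory has to exist beforehand
  chmod -R 777 "$dir"    # step 3: capital -R applies the mode recursively
}

prepare_dir "$STORAGE_ROOT/storage"        # master broker
prepare_dir "$STORAGE_ROOT/storageslave"   # replica broker

ls -ld "$STORAGE_ROOT/storage" "$STORAGE_ROOT/storageslave"
```

Since the chart runs the pods with runAsUser and fsGroup 3000, `chown -R 3000:3000` on these directories might be a narrower alternative to mode 777. That variant was not tried in the original setup.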

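- Master/replica problem

After the file permissions were fixed, startup reported the following error:

With one master and one replica broker, one started normally and the other reported this error. According to what I found online, it normally appears when two brokers are deployed on the same machine.

Our environment is a Kubernetes cluster, where the nodes should be isolated from each other, so my guess was that both brokers had mounted the same storage directory. I changed the PVs so that the two mount different directories (the replica now uses storageslave), and the next start succeeded.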
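A quick check for this kind of clash is sketched below. It assumes each hostPath is written as a `path: <dir>` line, as in local_pv.yaml. The function name `duplicate_host_paths` and the inline sample, which reproduces the faulty state with both PVs on /tmp/storage, are illustrative only.

```shell
#!/usr/bin/env bash
# Sketch: report hostPath directories that more than one PV points at.

duplicate_host_paths() {
  # Collect every "path:" value, then keep only those that occur twice or more.
  awk '$1 == "path:" { print $2 }' "$1" | sort | uniq -d
}

# Demo input: two PVs that share one directory.
demo=$(mktemp)
cat > "$demo" <<'EOF'
kind: PersistentVolume
metadata:
  name: broker-storage-rocketmq-broker-master-0
spec:
  hostPath:
    path: /tmp/storage
---
kind: PersistentVolume
metadata:
  name: broker-storage-rocketmq-broker-replica-id1-0
spec:
  hostPath:
    path: /tmp/storage
EOF

dups=$(duplicate_host_paths "$demo")
echo "shared hostPath: ${dups:-none}"
```

Running `duplicate_host_paths local_pv.yaml` on the corrected file should print nothing, because the replica then uses /tmp/storageslave.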

- 命名空间问题

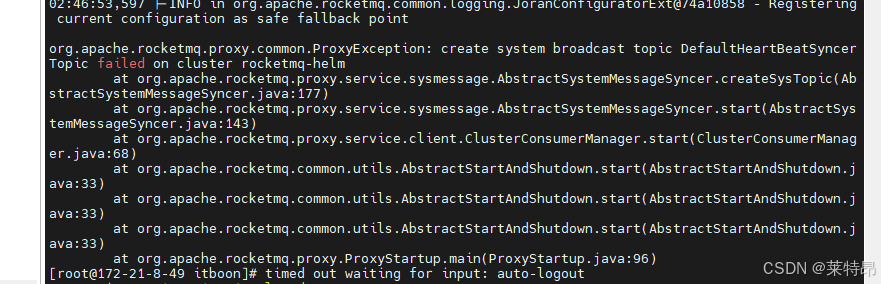

转移到开发环境后报,启动broker和namesrver正常,但proxy启动不了,报:

org.apache.rocketmq.proxy.common.proxyexception: create system broadcast topic defaultheartbeatsyncertopic failed on cluster rocketmq-helm

在本地环境转完yaml启动时没报过。

网上查了下,如果broker没正确配置nameserver,也会报这个错误。怀疑是环境变了后,某些配置需要根据环境修改。把目录下的配置都仔细研究了下,尤其涉及broker和proxy的nameserver地址的。

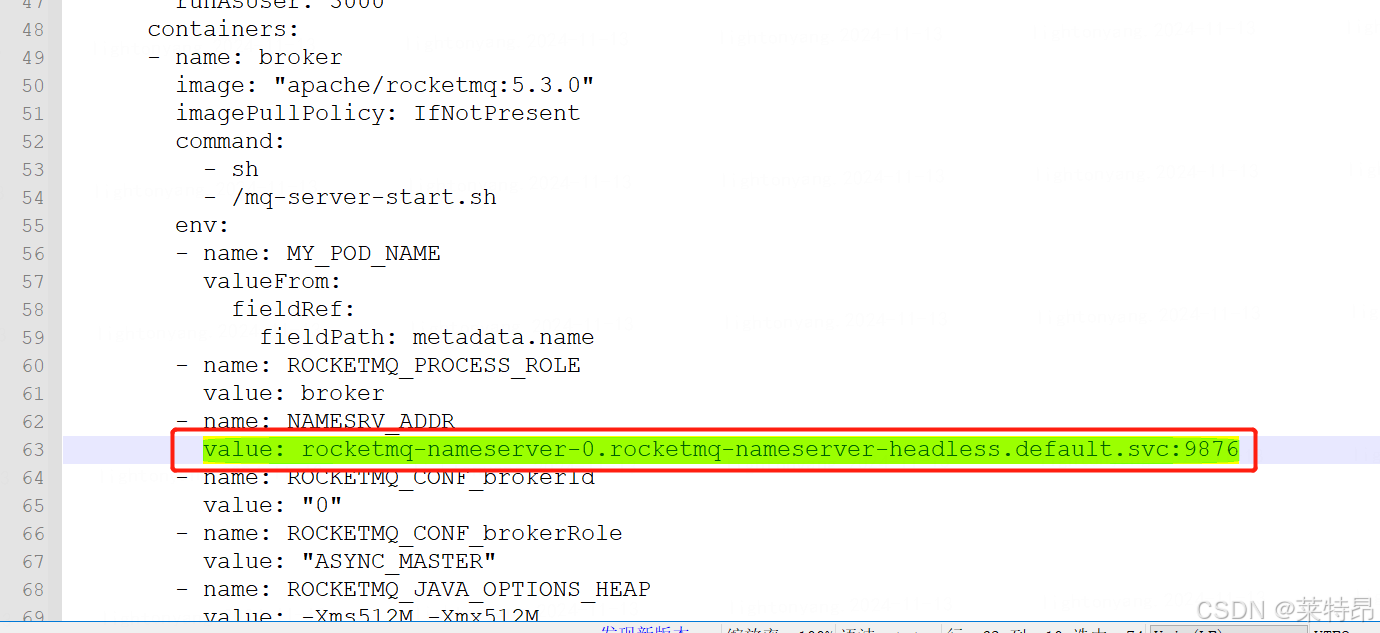

还有个地方有差异,由于开发环境多人共用,有许多的应用在跑,而导出的yaml文件会默认在k8s的default namespace启动pod,容易造成混乱和不好管理。所以尝试在yaml文件中加入了namespace:rocketmq。

最后排查确实由于这个导致,在proxy和broker的配置文件中,还有这句读取nameserver地址的语句:

需要将其中的:

value: rocketmq-nameserver-0.rocketmq-nameserver-headless.default.svc:9876

改为:

value: rocketmq-nameserver-0.rocketmq-nameserver-headless.rocketmq.svc:9876

这个环境变量提供了 rocketmq nameserver 的地址和端口。rocketmq-nameserver-0.rocketmq-nameserver-headless.default.svc 是 nameserver pod 的 dns 名称,9876 是 nameserver 服务的端口。这个地址用于客户端或 broker 连接到 nameserver,以便进行服务发现和元数据同步。

其中,rocketmq-nameserver-0是当前nameserver的name,rocketmq-nameserver-headless对应headless service的name,default对应namespace。所以部署新的k8s命名空间后,需要也把这里的default改为rocketmq的namespace,否则就会报找不到无法创建topit的错误。不过,这里挺奇怪的,broker能正常启动,只有启动proxy的时候才会报这个错误,估计rocketmq5新版本做了什么修改。
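The replacement can be applied to all rendered files in one pass. The following is a minimal sketch, assuming GNU sed and find. The function name `retarget_namesrv` and the demo file are hypothetical, and the pattern only touches addresses of the form `rocketmq-nameserver-headless.<namespace>.svc`.

```shell
#!/usr/bin/env bash
# Sketch: point every nameserver address in the rendered YAML at a new namespace.

retarget_namesrv() {
  local dir=$1 old_ns=$2 new_ns=$3
  # Rewrites "<headless-svc>.<old_ns>.svc" to "<headless-svc>.<new_ns>.svc" in place.
  find "$dir" -name '*.yaml' -exec \
    sed -i "s/\(rocketmq-nameserver-headless\)\.${old_ns}\.svc/\1.${new_ns}.svc/g" {} +
}

# Demo on a throwaway file holding the env entry quoted above.
demo_dir=$(mktemp -d)
cat > "$demo_dir/deployment.yaml" <<'EOF'
        - name: NAMESRV_ADDR
          value: rocketmq-nameserver-0.rocketmq-nameserver-headless.default.svc:9876
EOF

retarget_namesrv "$demo_dir" default rocketmq
result=$(grep 'value:' "$demo_dir/deployment.yaml" | awk '{ print $2 }')
echo "$result"
```

For the real files the call would be `retarget_namesrv ./rocketmq-cluster-yaml default rocketmq`. The ConfigMap may contain similar `<service>.<namespace>.svc` addresses for the controller peers, which this pattern does not touch.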

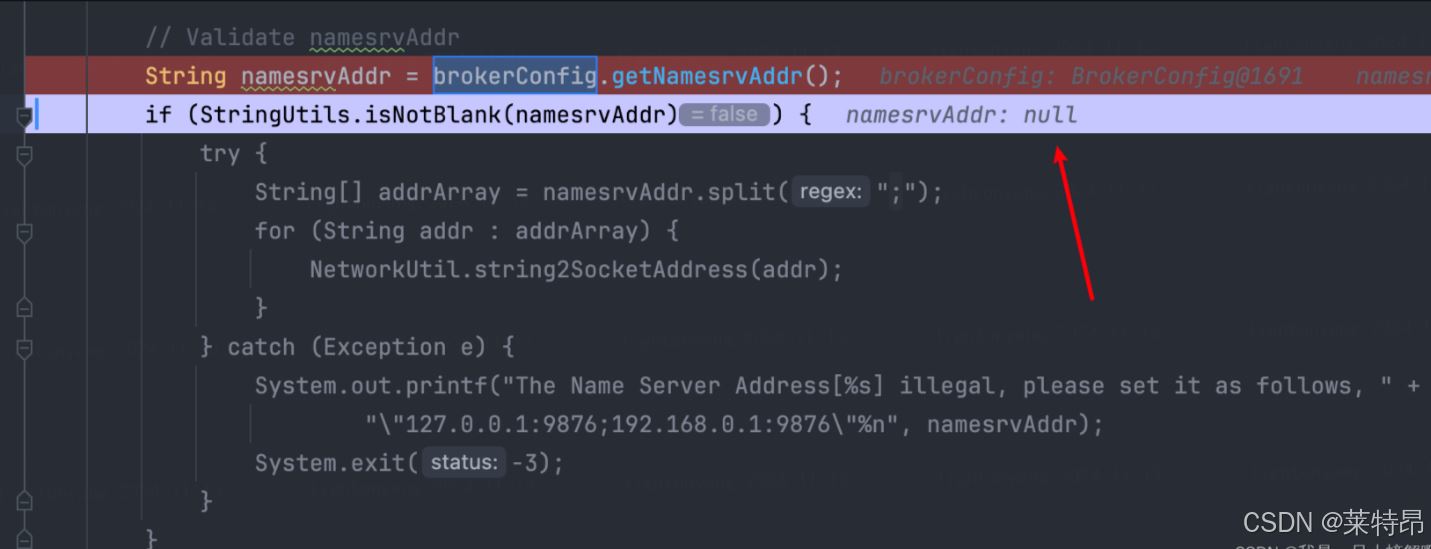

看了别人debug启动源码,指出是这里的问题:

在brokerstartup.java阅读发现这个是namesrv的地址,如果不添加的话,会导致即使你启动了broker,但其实并不会在namesrv上有任何注册信息。

如果不配置会发生什么呢,主要体现在proxy启动的时候,就一定会报错

create system broadcast topic defaultheartbeatsyncertopic failed on cluster defaultcluster

Summary

The above is my personal experience; I hope it serves as a useful reference.
