Redis Cluster Setup and Cluster Reads/Writes: An Example

2025-02-28 • Redis

Problem

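When capacity runs out, how does Redis scale out? Under concurrent writes, how does Redis spread the load? In addition, with master-replica replication or chained replication (replicas of replicas), a failed master means the master's IP address changes, so the application's configuration (host address, port, and so on) has to be updated.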

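This used to be solved with a proxy in front of Redis, but Redis itself provides a solution: a decentralized cluster, Redis Cluster. It was introduced in Redis 3.0, and the setup below uses Redis 7. Every node can communicate with every other node. For example, suppose there are nodes A, B, and C. A client that wants data held by B can send its request to A, and A replies with a redirection that tells the client to go to B. No proxy server or load balancer is needed to find the right node.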

What Is a Cluster

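Redis Cluster scales Redis horizontally. You start N Redis master nodes and the whole dataset is distributed across them, so each master stores roughly 1/N of the data. Replicas hold copies of a master's data rather than an extra share.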
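Under the hood, the key space is divided into 16384 hash slots. When the cluster is created, redis-cli gives each master a contiguous range of those slots. The sketch below splits the slots evenly across N masters. It uses an assumed fractional-cursor rounding scheme, chosen because it reproduces the ranges redis-cli prints in this walkthrough (0-5460, 5461-10922, 10923-16383). It is not a copy of redis-cli's code.

```python
"""Sketch: split Redis Cluster's 16384 hash slots evenly across N masters."""

CLUSTER_SLOTS = 16384  # fixed number of hash slots in Redis Cluster


def split_slots(num_masters: int) -> list[tuple[int, int]]:
    """Return inclusive (first, last) slot ranges, one per master.

    Assumption: ranges are contiguous, and each boundary is rounded from a
    fractional cursor, which matches the split redis-cli printed here.
    """
    if num_masters < 1:
        raise ValueError("need at least one master")
    per_master = CLUSTER_SLOTS / num_masters
    ranges = []
    cursor = 0.0
    first = 0
    for i in range(num_masters):
        if i == num_masters - 1:
            last = CLUSTER_SLOTS - 1  # the last master takes whatever is left
        else:
            last = round(cursor + per_master - 1)
        ranges.append((first, last))
        first = last + 1
        cursor += per_master
    return ranges


if __name__ == "__main__":
    for first, last in split_slots(3):
        print(f"slots {first}-{last} ({last - first + 1} slots)")
```

With three masters the sizes come out as 5461, 5462, and 5461 slots, the same counts shown in the cluster check output.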

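Redis Cluster provides a degree of availability under partitions. Even if some nodes fail or cannot communicate with the rest, the cluster can keep serving commands. Larger failures, such as losing a majority of the masters, still make the cluster unavailable.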
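Here is a simplified way to reason about which failures the cluster survives. Under the default setting (cluster-require-full-coverage yes), the cluster serves requests only while every hash slot has a working master. When a master fails, one of its replicas can be promoted to take over its slots, but the promotion needs votes from a majority of masters. The sketch models only those rules, for simultaneous failures, in the three-master, three-replica layout built later in this article. It is an illustration, not Redis's failure detector, and the helper names are hypothetical.

```python
"""Sketch: does the cluster still cover all slots after simultaneous failures?"""

# Layout from this walkthrough: master address -> its replicas' addresses.
LAYOUT = {
    "192.168.58.129:6381": ["192.168.58.130:6384"],
    "192.168.58.130:6383": ["192.168.58.212:6386"],
    "192.168.58.212:6385": ["192.168.58.129:6382"],
}


def cluster_ok(layout: dict[str, list[str]], failed: set[str]) -> bool:
    """Simplified model, not the real failure detector.

    Assumptions: every master owns slots; full coverage is required (the
    default); a failed master's slots stay covered only if one of its
    replicas survives and a majority of masters survive to vote for it.
    """
    majority = len(layout) // 2 + 1
    alive_masters = sum(1 for m in layout if m not in failed)
    for master, replicas in layout.items():
        if master not in failed:
            continue  # a healthy master keeps serving its own slots
        replica_alive = any(r not in failed for r in replicas)
        if not replica_alive or alive_masters < majority:
            return False  # this master's slots become uncovered
    return True


def nodes_on_host(layout: dict[str, list[str]], ip: str) -> set[str]:
    """Every node address on one host, e.g. to simulate a VM going down."""
    everyone = set(layout) | {r for rs in layout.values() for r in rs}
    return {n for n in everyone if n.rsplit(":", 1)[0] == ip}


if __name__ == "__main__":
    for ip in ("192.168.58.129", "192.168.58.130", "192.168.58.212"):
        ok = cluster_ok(LAYOUT, nodes_on_host(LAYOUT, ip))
        print(ip, "down ->", "ok" if ok else "fail")
```

In this layout, losing any single VM leaves every slot covered. Losing two masters at once does not, because the one remaining master is not a majority.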

Preparing the Environment

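- Delete any existing RDB and AOF files.

- On three virtual machines, create the directory /myredis/cluster.

- Set up 6 instances: three masters and three replicas. The master ports are 6381, 6383, and 6385, and the replica ports are 6382, 6384, and 6386 (adjust to your environment). The final master/replica pairing is decided by redis-cli --cluster create. In the run below, for example, 6382 ends up replicating 6385.

- Basic settings in each configuration file (the same as for master-replica replication and Sentinel mode):

  - daemonize yes

  - 6 different ports, with no duplicates

  - 6 different pid file names; include the port number so each one is easy to identify

  - 6 different log file names, also including the port number

  - 6 different dump.rdb file names, also including the port number

  - appendonly: either turn it off or give each instance its own AOF file name

- Cluster settings in each configuration file:

  - cluster-enabled yes: enables cluster mode

  - cluster-config-file nodes-6379.conf: sets the name of the node configuration file, which Redis creates and updates itself

  - cluster-node-timeout 15000: how long, in milliseconds, a node can be unreachable before it is considered failed; after that, the cluster automatically fails over to a replica (the template below uses 5000)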

Configuration file template (substitute the port number):

    [root@redis-cluster2 cluster]# cat rediscluster6383.conf
    bind 0.0.0.0
    daemonize yes
    protected-mode no
    logfile "/myredis/cluster/cluster6383.log"
    pidfile /myredis/cluster6383.pid
    dir /myredis/cluster
    dbfilename dump6383.rdb
    appendonly yes
    appendfilename "appendonly6383.aof"
    requirepass 111111
    masterauth 111111
    port 6383
    cluster-enabled yes
    cluster-config-file nodes-6383.conf
    cluster-node-timeout 5000
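The six files differ only in the port number, so they can be generated instead of edited by hand. This is a minimal sketch that assumes the directory layout and password from the template above. The helper names render_config and write_configs, and the choice of output directory, are illustrative and not part of the original setup.

```python
"""Sketch: render one rediscluster<port>.conf per instance from the template."""

from pathlib import Path

# Template copied from the article; {port} is filled in per instance.
TEMPLATE = """\
bind 0.0.0.0
daemonize yes
protected-mode no
logfile "/myredis/cluster/cluster{port}.log"
pidfile /myredis/cluster{port}.pid
dir /myredis/cluster
dbfilename dump{port}.rdb
appendonly yes
appendfilename "appendonly{port}.aof"
requirepass 111111
masterauth 111111
port {port}
cluster-enabled yes
cluster-config-file nodes-{port}.conf
cluster-node-timeout 5000
"""

PORTS = [6381, 6382, 6383, 6384, 6385, 6386]


def render_config(port: int) -> str:
    """Return the config text for one instance (hypothetical helper)."""
    return TEMPLATE.format(port=port)


def write_configs(out_dir: Path, ports: list[int]) -> list[Path]:
    """Write rediscluster<port>.conf for each port into out_dir."""
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for port in ports:
        path = out_dir / f"rediscluster{port}.conf"
        path.write_text(render_config(port))
        paths.append(path)
    return paths


if __name__ == "__main__":
    # Print instead of writing files, so the sketch is safe to run anywhere.
    print(render_config(6383))
```

On each VM you would call write_configs(Path("/myredis/cluster"), ...) with only the two ports that VM hosts.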

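Write these configuration files to the three virtual machines, two Redis instances per VM, and start each instance with redis-server, passing its configuration file: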

    [root@redis-cluster2 cluster]# redis-server /myredis/cluster/rediscluster6383.conf
    [root@redis-cluster2 cluster]# redis-server /myredis/cluster/rediscluster6384.conf
    [root@redis-cluster2 cluster]# ps -ef | grep redis
    root 1950 1 0 15:38 ? 00:00:00 redis-server 0.0.0.0:6383 [cluster]
    root 1956 1 0 15:38 ? 00:00:00 redis-server 0.0.0.0:6384 [cluster]
    root 1962 1569 0 15:38 pts/0 00:00:00 grep --color=auto redis

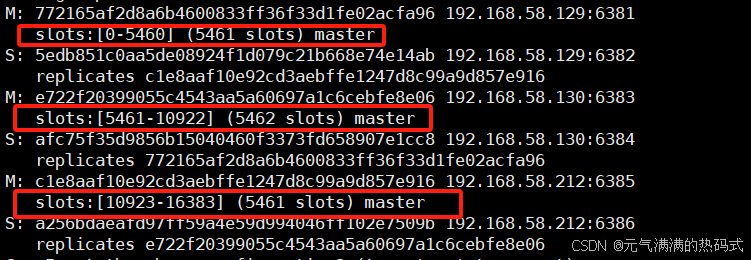

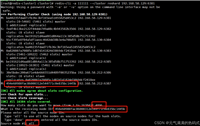

Use redis-cli to create the cluster and set up the master-replica relationships:

    [root@k8s-master01 cluster]# redis-cli -a 111111 --cluster create --cluster-replicas 1 192.168.58.129:6381 192.168.58.129:6382 192.168.58.130:6383 192.168.58.130:6384 192.168.58.212:6385 192.168.58.212:6386
    Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
    >>> Performing hash slots allocation on 6 nodes...
    Master[0] -> Slots 0 - 5460
    Master[1] -> Slots 5461 - 10922
    Master[2] -> Slots 10923 - 16383
    Adding replica 192.168.58.130:6384 to 192.168.58.129:6381
    Adding replica 192.168.58.212:6386 to 192.168.58.130:6383
    Adding replica 192.168.58.129:6382 to 192.168.58.212:6385
    M: 772165af2d8a6b4600833ff36f33d1fe02acfa96 192.168.58.129:6381
       slots:[0-5460] (5461 slots) master
    S: 5edb851c0aa5de08924f1d079c21b668e74e14ab 192.168.58.129:6382
       replicates c1e8aaf10e92cd3aebffe1247d8c99a9d857e916
    M: e722f20399055c4543aa5a60697a1c6cebfe8e06 192.168.58.130:6383
       slots:[5461-10922] (5462 slots) master
    S: afc75f35d9856b15040460f3373fd658907e1cc8 192.168.58.130:6384
       replicates 772165af2d8a6b4600833ff36f33d1fe02acfa96
    M: c1e8aaf10e92cd3aebffe1247d8c99a9d857e916 192.168.58.212:6385
       slots:[10923-16383] (5461 slots) master
    S: a256bdaeafd97ff59a4e59d994046ff102e7509b 192.168.58.212:6386
       replicates e722f20399055c4543aa5a60697a1c6cebfe8e06
    Can I set the above configuration? (type 'yes' to accept): yes
    >>> Nodes configuration updated
    >>> Assign a different config epoch to each node
    >>> Sending CLUSTER MEET messages to join the cluster
    Waiting for the cluster to join
    .
    >>> Performing Cluster Check (using node 192.168.58.129:6381)
    M: 772165af2d8a6b4600833ff36f33d1fe02acfa96 192.168.58.129:6381
       slots:[0-5460] (5461 slots) master
       1 additional replica(s)
    S: afc75f35d9856b15040460f3373fd658907e1cc8 192.168.58.130:6384
       slots: (0 slots) slave
       replicates 772165af2d8a6b4600833ff36f33d1fe02acfa96
    S: 5edb851c0aa5de08924f1d079c21b668e74e14ab 192.168.58.129:6382
       slots: (0 slots) slave
       replicates c1e8aaf10e92cd3aebffe1247d8c99a9d857e916
    M: e722f20399055c4543aa5a60697a1c6cebfe8e06 192.168.58.130:6383
       slots:[5461-10922] (5462 slots) master
       1 additional replica(s)
    S: a256bdaeafd97ff59a4e59d994046ff102e7509b 192.168.58.212:6386
       slots: (0 slots) slave
       replicates e722f20399055c4543aa5a60697a1c6cebfe8e06
    M: c1e8aaf10e92cd3aebffe1247d8c99a9d857e916 192.168.58.212:6385
       slots:[10923-16383] (5461 slots) master
       1 additional replica(s)
    [OK] All nodes agree about slots configuration.
    >>> Check for open slots...
    >>> Check slots coverage...
    [OK] All 16384 slots covered.
    [root@k8s-master01 cluster]# ll
    total 52
    drwxr-xr-x. 2 root root 4096 Dec 1 15:30 appendonlydir
    -rw-r--r--. 1 root root 10718 Dec 1 15:50 cluster6381.log
    -rw-r--r--. 1 root root 15205 Dec 1 15:50 cluster6382.log
    -rw-r--r--. 1 root root 171 Dec 1 15:49 dump6382.rdb
    -rw-r--r--. 1 root root 799 Dec 1 15:49 nodes-6381.conf
    -rw-r--r--. 1 root root 811 Dec 1 15:49 nodes-6382.conf
    -rw-r--r--. 1 root root 347 Dec 1 15:32 rediscluster6381.conf
    -rw-r--r--. 1 root root 346 Dec 1 15:32 rediscluster6382.conf
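The "Adding replica" lines show redis-cli spreading the pairs across hosts. When it can, redis-cli avoids putting a replica on the same host as its master, so losing one VM never removes both copies of a slot range. The small sketch below checks this for the layout above. The mapping is transcribed by hand from the output, and the helper names are hypothetical.

```python
"""Sketch: verify that no replica shares a host with its master."""

# Transcribed from the "Adding replica X to Y" lines above: replica -> master.
REPLICA_OF = {
    "192.168.58.130:6384": "192.168.58.129:6381",
    "192.168.58.212:6386": "192.168.58.130:6383",
    "192.168.58.129:6382": "192.168.58.212:6385",
}


def host(addr: str) -> str:
    """Return the IP part of an 'ip:port' address."""
    return addr.rsplit(":", 1)[0]


def same_host_pairs(replica_of: dict[str, str]) -> list[tuple[str, str]]:
    """Return (replica, master) pairs that run on the same host."""
    return [
        (replica, master)
        for replica, master in replica_of.items()
        if host(replica) == host(master)
    ]


if __name__ == "__main__":
    bad = same_host_pairs(REPLICA_OF)
    print("every replica is on a different host" if not bad else bad)
```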

Connect to 6381 and check its replication status:

    [root@k8s-master01 cluster]# redis-cli -a 111111 -p 6381
    Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
    127.0.0.1:6381>
    127.0.0.1:6381> info replication
    # Replication
    role:master
    connected_slaves:1
    slave0:ip=192.168.58.130,port=6384,state=online,offset=224,lag=0
    master_failover_state:no-failover
    master_replid:6955624b397904832e2911972f1c9b871e8b86fc
    master_replid2:0000000000000000000000000000000000000000
    master_repl_offset:224
    second_repl_offset:-1
    repl_backlog_active:1
    repl_backlog_size:1048576
    repl_backlog_first_byte_offset:1
    repl_backlog_histlen:224
    127.0.0.1:6381>

Cluster Commands

View cluster node information (CLUSTER NODES)

    127.0.0.1:6381> cluster nodes
    afc75f35d9856b15040460f3373fd658907e1cc8 192.168.58.130:6384@16384 slave 772165af2d8a6b4600833ff36f33d1fe02acfa96 0 1733039642782 1 connected
    5edb851c0aa5de08924f1d079c21b668e74e14ab 192.168.58.129:6382@16382 slave c1e8aaf10e92cd3aebffe1247d8c99a9d857e916 0 1733039641297 5 connected
    e722f20399055c4543aa5a60697a1c6cebfe8e06 192.168.58.130:6383@16383 master - 0 1733039641617 3 connected 5461-10922
    a256bdaeafd97ff59a4e59d994046ff102e7509b 192.168.58.212:6386@16386 slave e722f20399055c4543aa5a60697a1c6cebfe8e06 0 1733039641067 3 connected
    c1e8aaf10e92cd3aebffe1247d8c99a9d857e916 192.168.58.212:6385@16385 master - 0 1733039640849 5 connected 10923-16383
    772165af2d8a6b4600833ff36f33d1fe02acfa96 192.168.58.129:6381@16381 myself,master - 0 1733039639000 1 connected 0-5460
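Each CLUSTER NODES line follows the documented layout: id, ip:port@cport, flags, master, ping-sent, pong-recv, config-epoch, link-state, and then any slots. The minimal parser below assumes well-formed output like the sample above and groups replicas under their masters. The Node class and the function names are hypothetical.

```python
"""Sketch: parse CLUSTER NODES output and map each master to its replicas."""

from dataclasses import dataclass, field
from typing import Optional

# The six lines from the output above.
SAMPLE = """\
afc75f35d9856b15040460f3373fd658907e1cc8 192.168.58.130:6384@16384 slave 772165af2d8a6b4600833ff36f33d1fe02acfa96 0 1733039642782 1 connected
5edb851c0aa5de08924f1d079c21b668e74e14ab 192.168.58.129:6382@16382 slave c1e8aaf10e92cd3aebffe1247d8c99a9d857e916 0 1733039641297 5 connected
e722f20399055c4543aa5a60697a1c6cebfe8e06 192.168.58.130:6383@16383 master - 0 1733039641617 3 connected 5461-10922
a256bdaeafd97ff59a4e59d994046ff102e7509b 192.168.58.212:6386@16386 slave e722f20399055c4543aa5a60697a1c6cebfe8e06 0 1733039641067 3 connected
c1e8aaf10e92cd3aebffe1247d8c99a9d857e916 192.168.58.212:6385@16385 master - 0 1733039640849 5 connected 10923-16383
772165af2d8a6b4600833ff36f33d1fe02acfa96 192.168.58.129:6381@16381 myself,master - 0 1733039639000 1 connected 0-5460
"""


@dataclass
class Node:
    node_id: str
    addr: str                 # "ip:port"; the cluster bus port after "@" is dropped
    flags: set[str]           # e.g. {"myself", "master"}
    master_id: Optional[str]  # None for masters ("-" in the output)
    link_state: str           # "connected" or "disconnected"
    slots: list[str] = field(default_factory=list)  # e.g. ["0-5460"]


def parse_cluster_nodes(text: str) -> dict[str, Node]:
    """Parse CLUSTER NODES output into Node objects keyed by node ID."""
    nodes: dict[str, Node] = {}
    for line in text.strip().splitlines():
        parts = line.split()
        node_id, addr_field, flags, master = parts[:4]
        # parts[4:7] are ping-sent, pong-recv and config-epoch (unused here).
        link_state = parts[7]
        # Redis 7 may append ",hostname" after "ip:port@cport".
        addr = addr_field.split(",", 1)[0].split("@", 1)[0]
        nodes[node_id] = Node(
            node_id=node_id,
            addr=addr,
            flags=set(flags.split(",")),
            master_id=None if master == "-" else master,
            link_state=link_state,
            slots=parts[8:],
        )
    return nodes


def replicas_by_master(nodes: dict[str, Node]) -> dict[str, list[str]]:
    """Map each master's address to the addresses of the replicas following it."""
    result = {n.addr: [] for n in nodes.values() if "master" in n.flags}
    for n in nodes.values():
        if n.master_id is not None and n.master_id in nodes:
            result.setdefault(nodes[n.master_id].addr, []).append(n.addr)
    return result


if __name__ == "__main__":
    print(replicas_by_master(parse_cluster_nodes(SAMPLE)))
```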

View the hash slot of a key

    cluster keyslot k1
    192.168.58.129:6381> cluster keyslot k1
    (integer) 12706
    192.168.58.129:6381> cluster keyslot k2
    (integer) 449
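CLUSTER KEYSLOT computes HASH_SLOT = CRC16(key) mod 16384. Per the Redis Cluster specification, CRC16 here is the XMODEM variant (polynomial 0x1021, initial value 0). If the key contains a non-empty {...} hash tag, only the tag is hashed, which lets related keys share a slot. The pure-Python sketch below reproduces the two values above.

```python
"""Sketch: compute a key's Redis Cluster hash slot (what CLUSTER KEYSLOT returns)."""


def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC16-CCITT (XMODEM): poly 0x1021, init 0x0000, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Return the hash slot (0-16383) for a key, honouring {hash tags}."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        # Hash only the text between the first "{" and the next "}", if non-empty.
        if end != -1 and end != start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


if __name__ == "__main__":
    for k in ("k1", "k2"):
        print(k, key_slot(k))  # 12706 and 449, matching CLUSTER KEYSLOT above
```

Keys such as {user1000}.following and {user1000}.followers hash only "user1000", so they land in the same slot.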

Count the keys in a slot

    cluster countkeysinslot 4847   # counts only keys stored on the current node; slots it does not serve return 0

List the keys in a slot

    cluster getkeysinslot <slot> <count>   # returns up to <count> keys in <slot>, also from the current node only

View the cluster state from a single node (CLUSTER INFO)

    127.0.0.1:6381> cluster info
    cluster_state:ok
    cluster_slots_assigned:16384
    cluster_slots_ok:16384
    cluster_slots_pfail:0
    cluster_slots_fail:0
    cluster_known_nodes:6
    cluster_size:3
    cluster_current_epoch:6
    cluster_my_epoch:1
    cluster_stats_messages_ping_sent:1226
    cluster_stats_messages_pong_sent:1199
    cluster_stats_messages_sent:2425
    cluster_stats_messages_ping_received:1194
    cluster_stats_messages_pong_received:1226
    cluster_stats_messages_meet_received:5
    cluster_stats_messages_received:2425
    total_cluster_links_buffer_limit_exceeded:0
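CLUSTER INFO replies with plain field:value lines, so a basic health check is easy to script. This minimal sketch assumes the reply has already been captured as text, for example with redis-cli. The sample is an excerpt of the output above. Which fields count as "healthy" (cluster_state, slot coverage, failing slots) is a choice made here for illustration.

```python
"""Sketch: parse CLUSTER INFO output and run a basic health check."""

SAMPLE = """\
cluster_state:ok
cluster_slots_assigned:16384
cluster_slots_ok:16384
cluster_slots_pfail:0
cluster_slots_fail:0
cluster_known_nodes:6
cluster_size:3
"""


def parse_info(text: str) -> dict[str, str]:
    """Turn 'field:value' lines into a dict, skipping blanks and '#' headers."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition(":")
        fields[key] = value
    return fields


def health_problems(info: dict[str, str]) -> list[str]:
    """Return human-readable problems; an empty list means the checks passed."""
    problems = []
    if info.get("cluster_state") != "ok":
        problems.append(f"cluster_state is {info.get('cluster_state')!r}")
    if int(info.get("cluster_slots_ok", 0)) != 16384:
        problems.append("not all 16384 slots are served by healthy nodes")
    if int(info.get("cluster_slots_fail", 0)) > 0:
        problems.append("some slots are served by nodes in FAIL state")
    return problems


if __name__ == "__main__":
    print(health_problems(parse_info(SAMPLE)) or "cluster looks healthy")
```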

Reading and Writing the Cluster

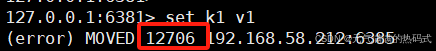

When writing through 6381, set k1 fails with an error pointing to 6385, while set k2 succeeds. Why?

    127.0.0.1:6381> set k1 v1
    (error) MOVED 12706 192.168.58.212:6385
    127.0.0.1:6381> set k2 v2
    OK
    127.0.0.1:6381> keys *
    1) "k2"
    127.0.0.1:6381>

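When the cluster was created, the 16384 hash slots were split into three ranges, one per master, and a key can only be written on the master that owns its slot. k2 maps to slot 449, which belongs to 6381, so set k2 succeeds there. k1 maps to slot 12706, which belongs to 6385, so 6381 replies with a MOVED redirection instead.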
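To predict which master will accept a write, look up the key's slot in the ranges assigned at creation time. The sketch below hard-codes the ranges and addresses from this walkthrough. That is an assumption: the mapping changes if slots are resharded or a replica is promoted. The function takes a slot number, such as the value returned by CLUSTER KEYSLOT, and its name is hypothetical.

```python
"""Sketch: find the master that owns a hash slot, using this article's layout."""

import bisect

# (first_slot, last_slot, master) as assigned by redis-cli --cluster create above.
SLOT_RANGES = [
    (0, 5460, "192.168.58.129:6381"),
    (5461, 10922, "192.168.58.130:6383"),
    (10923, 16383, "192.168.58.212:6385"),
]
_STARTS = [first for first, _, _ in SLOT_RANGES]


def owner_of(slot: int) -> str:
    """Return the 'ip:port' of the master serving the slot."""
    if not 0 <= slot <= 16383:
        raise ValueError(f"slot out of range: {slot}")
    # The last range whose first slot is <= slot is the only candidate.
    i = bisect.bisect_right(_STARTS, slot) - 1
    first, last, master = SLOT_RANGES[i]
    if not first <= slot <= last:
        raise LookupError(f"slot {slot} is not assigned")
    return master


if __name__ == "__main__":
    print(owner_of(12706))  # slot of k1
    print(owner_of(449))    # slot of k2
```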

How do we fix this?

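Start redis-cli with the -c (cluster mode) option so that it follows redirections instead of reporting them as errors, then add the two keys again: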

    127.0.0.1:6381> flushall
    OK
    127.0.0.1:6381> keys *
    (empty array)
    127.0.0.1:6381> quit
    [root@k8s-master01 cluster]# redis-cli -a 111111 -p 6381 -c
    Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
    127.0.0.1:6381>
    127.0.0.1:6381>
    127.0.0.1:6381> keys *
    (empty array)
    127.0.0.1:6381> set k1 v1
    -> Redirected to slot [12706] located at 192.168.58.212:6385
    OK
    192.168.58.212:6385>
    192.168.58.212:6385> set k2 v2
    -> Redirected to slot [449] located at 192.168.58.129:6381
    OK
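The -c behaviour happens on the client side. When a node answers MOVED <slot> <ip:port>, redis-cli connects to that address and retries the command, which is why the prompt switches to 6385. The sketch below simulates this against in-memory fake nodes. The FakeNode class, the hop limit, and passing the slot in explicitly (taken from CLUSTER KEYSLOT above) are all hypothetical simplifications. Real cluster-aware client libraries typically also cache the slot-to-node map, so later requests go straight to the right node.

```python
"""Sketch: follow MOVED redirections like a cluster-aware client (simulated)."""


class MovedError(Exception):
    """Stands in for a '-MOVED <slot> <ip:port>' error reply."""

    def __init__(self, slot: int, addr: str):
        super().__init__(f"MOVED {slot} {addr}")
        self.slot = slot
        self.addr = addr


class FakeNode:
    """Hypothetical in-memory master that owns one inclusive slot range."""

    def __init__(self, addr: str, first: int, last: int, cluster: dict):
        self.addr, self.first, self.last = addr, first, last
        self.cluster = cluster  # shared map: address -> FakeNode
        self.data: dict[str, str] = {}

    def set(self, key: str, slot: int, value: str) -> str:
        if self.first <= slot <= self.last:
            self.data[key] = value
            return "OK"
        for node in self.cluster.values():
            if node.first <= slot <= node.last:
                raise MovedError(slot, node.addr)
        # Real Redis would reply with a CLUSTERDOWN error instead.
        raise RuntimeError(f"slot {slot} is not served")


def build_cluster() -> dict:
    """Three fake masters with the slot ranges from this walkthrough."""
    cluster: dict = {}
    for addr, first, last in [
        ("192.168.58.129:6381", 0, 5460),
        ("192.168.58.130:6383", 5461, 10922),
        ("192.168.58.212:6385", 10923, 16383),
    ]:
        cluster[addr] = FakeNode(addr, first, last, cluster)
    return cluster


def set_with_redirects(cluster, addr, key, slot, value, max_hops=5):
    """Send SET to addr; on MOVED, retry at the node named in the error."""
    for _ in range(max_hops):
        try:
            return cluster[addr].set(key, slot, value), addr
        except MovedError as moved:
            print(f"-> Redirected to slot [{moved.slot}] located at {moved.addr}")
            addr = moved.addr
    raise RuntimeError("too many redirections")


if __name__ == "__main__":
    nodes = build_cluster()
    # Slots from CLUSTER KEYSLOT above: k1 -> 12706, k2 -> 449.
    print(set_with_redirects(nodes, "192.168.58.129:6381", "k1", 12706, "v1"))
    print(set_with_redirects(nodes, "192.168.58.212:6385", "k2", 449, "v2"))
```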

